
Affective Pong Game

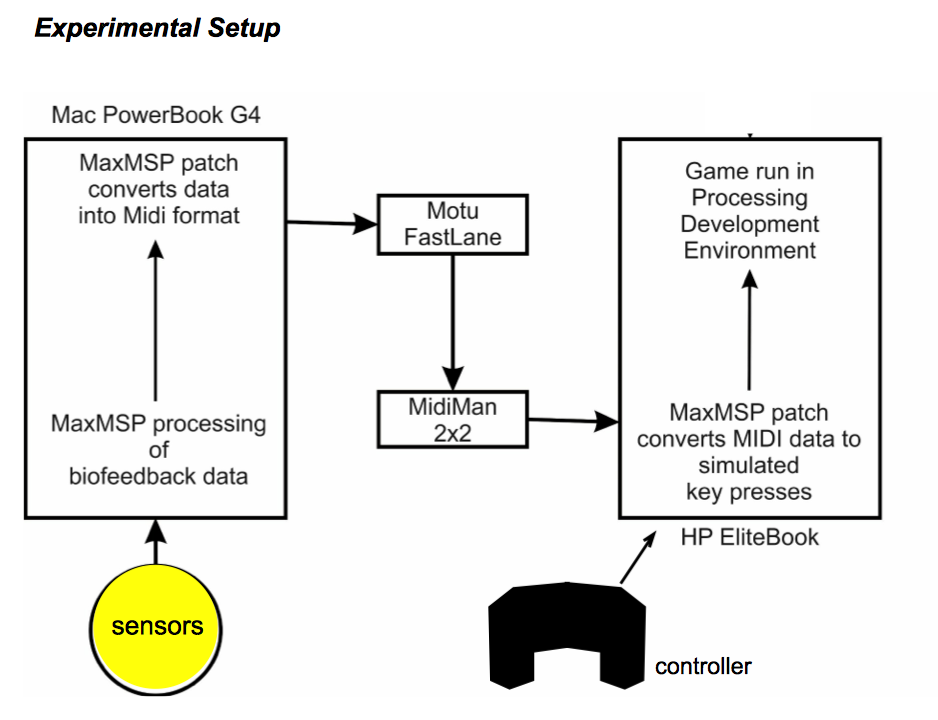

A research collaboration with Mark Palmer. Affective Pong is a video game (broadly based on the original Pong) which uses biofeedback sensors to explore players' involvement during bursts of gameplay.

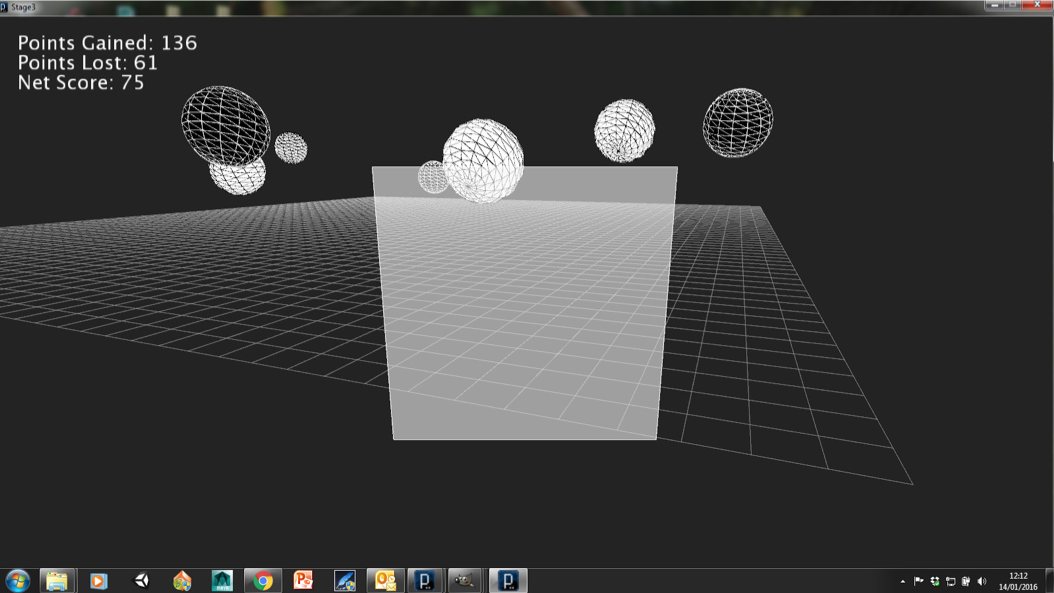

Screenshot of Affective Pong, © Mark Palmer 2014

A player has to hit the oncoming balls using the square bat displayed in the foreground. Bat and ball have to colour-match, otherwise no point is scored. The game starts off easy, but soon the number, speed and movements of the balls increase and players have to rise to the challenge to hit them all.

Research Summary

The project investigated whether and how sonified biofeedback could be used to create a greater sense of involvement for players in virtual spaces. In particular, it explored how sonic representations of players’ affective states could achieve this.

Affective Pong research took place at UWE Bristol between 2011 and 2015 and involved iterative development of the game in response to feedback from players. A/B test sessions involved players of different backgrounds and abilities and took the form of two gameplay runs of 7 to 10 minutes each. Each run was followed by a semi-structured interview (with special thanks to Lon Barfield).

Gameplay consisted of using an on-screen bat to hit approaching balls through four levels; balls and bat had to colour-match. Each level had a series of rounds, beginning with a single ball and adding one ball each round. Each round had to be cleared before moving on to the next, and each successive level increased the complexity of the task. An on-screen scoring system was provided to stimulate players’ desire to succeed.
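The level and round structure described above can be sketched as a simple schedule. This is an illustrative Python sketch, not the game's actual code: the number of rounds per level (`rounds_per_level`), the one-point score and all names here are hypothetical assumptions; only the four levels, the one-ball-per-round progression, the clear-before-advancing rule and the colour-match scoring come from the description.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Round:
    level: int  # 1-based level number
    index: int  # 1-based round number within the level
    balls: int  # balls that must be hit to clear the round


def schedule(levels: int = 4, rounds_per_level: int = 5) -> list[Round]:
    """Each level starts with a single ball; every further round adds one.

    rounds_per_level is a hypothetical value, not taken from the project.
    """
    return [
        Round(level, r, balls=r)
        for level in range(1, levels + 1)
        for r in range(1, rounds_per_level + 1)
    ]


def advance(position: int, cleared: bool) -> int:
    """Move on to the next round only once the current one has been cleared."""
    return position + 1 if cleared else position


def score_hit(bat_colour: str, ball_colour: str) -> int:
    """A hit only scores when bat and ball colours match (1 point is assumed)."""
    return 1 if bat_colour == ball_colour else 0
```

For example, `schedule()[5]` is the first round of level 2, which again starts with a single ball. Added difficulty within a level (ball speed and movement) is left out because the source gives no values for it.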

During testing sessions, participants said that listening to the biofeedback sounds made them feel more involved, motivated and immersed in the virtual space, expressed in statements such as “feeling zoned into the game”:

“…even though it’s a quite simplistic game, because it’s got your own involvement as well as just the controls, it kind of affects how you feel for it…”

Interestingly, the reasons for this experience were not always apparent to participants at the time, becoming clear only afterwards, when they reflected on the perceptions that had precipitated these feelings. Players’ experiences and responses may have operated at an emotional level inasmuch as they were liminal rather than overtly conscious events.

“…you’re never really, part of the game, except in obviously in the Kinect and stuff, whereas this is actually you feel like you are a part of the game, the way that that’s you on the screen, your own, body function I suppose, that’s your heartbeat. I thought that [it] was... now I know, I had an inkling, but now I know, I think that’s really something…”

Insights from the iterative game development and participant interviews were developed into a paper: Palmer, M., & Palmer, M. (2017). Putting the Player in the Picture; Biofeedback and Embodied Affect. Presented at the Sounding Out the Space conference, Dublin, 2017.

Research Purpose

The problem faced by those developing games, whether for entertainment or education, is how to create engaging and inclusive forms of participation. Interaction is a two-way process, and whilst there are many ways of feeding information about a virtual space to a user, the same cannot be said in reverse. Virtual environments predominantly respond to information from controllers (or cumulative data provided by the game state) but not to the user’s affective states. This leads to a simplified, purely causal relationship that excludes aspects of human ‘behaviour’ that begin subconsciously, such as affect and emotion.

Early on we realised the work had the capacity to tap into players’ affective states, as players said they could ‘hear [themselves] getting into trouble before they realized they were.’

Completed research demonstrated that hearing one’s own affective reactions, sonified via biofeedback sensors, can greatly improve one’s sense of being within a virtual space. Using sound in this way can allow developers to deliver a more embodied experience of virtual spaces.

Use of Sound

My contribution was to design the audio and to monitor players’ involvement in the game using biofeedback sensors (galvanic skin response, heart rate and heart rate variability). This made it possible to gain a better understanding of patterns of involvement, especially as it became increasingly difficult for players to score points.

Game audio included a countdown before each level (five consecutive beeps, the last one higher in pitch), as well as sounds for bouncing balls, changing the bat’s colour (a swoosh), a perfect hit (a harmonic chime), a partial hit when bat and ball were not colour-matched (a dissonant note) and a miss (a klaxon).
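As a small illustration, the countdown can be expressed as a list of (onset time, pitch) pairs. This is a hypothetical Python sketch: the one-second spacing and the 880 Hz and 1760 Hz pitches are assumptions chosen for the example; only the five beeps and the higher final beep come from the description.

```python
from __future__ import annotations


def countdown(
    beeps: int = 5,
    interval_s: float = 1.0,   # assumed spacing between beeps
    pitch_hz: float = 880.0,   # assumed pitch of the ordinary beeps
    final_hz: float = 1760.0,  # assumed higher pitch of the last beep
) -> list[tuple[float, float]]:
    """Return (onset in seconds, frequency in Hz) for each countdown beep."""
    return [
        (i * interval_s, final_hz if i == beeps - 1 else pitch_hz)
        for i in range(beeps)
    ]
```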

Heartbeat and GSR/HRV were sonified together and linked in with game event sounds (hits, misses). This was achieved by making use of the consonant and dissonant relationships between pitches within a given key:

The heartbeat was sonified as a ‘thump’, a low-pitched percussive sound with a soft attack and short duration. This sound served as the ‘tonic’ (the tonal centre of a diatonic scale). Changes in GSR/HRV readings shifted the pitch of the heartbeat sound one step at a time, and with it the overall key. Because the ‘perfect hits’ were tuned in harmonic relation to the heartbeat, they shifted in pitch along with it.
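The heartbeat-as-tonic mapping can be sketched as follows. This is an illustrative Python sketch under stated assumptions, not the project's implementation: a single `reading` value stands in for the combined GSR/HRV data, one "step" is assumed to be a semitone, and `BASE_TONIC_MIDI` and `READING_PER_STEP` are hypothetical constants. The thump is timed from the heart rate, and the tonic moves at most one step per update towards the pitch implied by the current reading.

```python
from __future__ import annotations

BASE_TONIC_MIDI = 36    # hypothetical: C2, low enough for a 'thump'
READING_PER_STEP = 0.5  # hypothetical: change in reading per pitch step


def midi_to_hz(note: int) -> float:
    """Equal-tempered frequency for a MIDI note number (A4 = 69 = 440 Hz)."""
    return 440.0 * 2.0 ** ((note - 69) / 12)


def beat_interval_s(bpm: float) -> float:
    """Time between heartbeat thumps for a given heart rate."""
    return 60.0 / bpm


def step_tonic(current: int, reading: float, baseline: float) -> int:
    """Move the tonic (and so the key) one step towards the reading's target.

    The target pitch rises as the reading moves above its baseline and falls
    below it; the tonic never jumps by more than one step per update.
    """
    target = BASE_TONIC_MIDI + round((reading - baseline) / READING_PER_STEP)
    if target > current:
        return current + 1
    if target < current:
        return current - 1
    return current
```

Limiting each update to a single step keeps key changes gradual, so a sudden spike in the reading is heard as a short climb rather than a jump.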

‘Partial hits’ were shorter in duration than ‘perfect hits’, with a slightly rising pitch, like a questioning tone communicating the unresolved nature of the player’s action. They were tuned a major second above the tonic, a dissonant interval that introduced tension, eased only by the next ‘perfect hit’, which brought the sound back to the tonal centre. This rudimentary ‘falling melody line’ back to the tonic rewarded players who hit the balls correctly.

‘Miss’ sounds contained a higher proportion of noise and remained unrelated to the tonal centre. These sounds broke the harmonic texture and underlined player mistakes.
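The relationship between game events and the current tonic can be sketched as a lookup that returns a sound specification. This is a hypothetical Python sketch: the major-triad voicing of the perfect-hit chime, the two-octave transposition, the durations, the glide amount, the noise proportions and the fixed `MISS_MIDI` pitch are all assumptions made for illustration. What follows the description is that perfect hits are consonant with the tonic, partial hits sit a major second above it, are shorter and rise slightly, and misses are noisy and independent of the key.

```python
from __future__ import annotations

from dataclasses import dataclass
from enum import Enum


class Event(Enum):
    PERFECT_HIT = "perfect_hit"
    PARTIAL_HIT = "partial_hit"
    MISS = "miss"


@dataclass(frozen=True)
class SoundSpec:
    midi_notes: tuple[int, ...]  # pitches to sound together
    duration_s: float            # length of the sound
    glide_semitones: float       # pitch rise over the sound's duration
    noise_mix: float             # 0 = pure tone, 1 = pure noise


MISS_MIDI = 70  # hypothetical fixed pitch, deliberately not tied to the tonic


def event_sound(event: Event, tonic: int) -> SoundSpec:
    """Choose a sound for a game event relative to the current heartbeat tonic."""
    root = tonic + 24  # assumed: chimes sit two octaves above the thump
    if event is Event.PERFECT_HIT:
        # Consonant chime: an assumed major triad built on the tonic.
        return SoundSpec((root, root + 4, root + 7), 0.8, 0.0, 0.0)
    if event is Event.PARTIAL_HIT:
        # Major second above the tonic: shorter and slightly rising.
        return SoundSpec((root + 2,), 0.3, 0.5, 0.0)
    # Miss: mostly noise, at a pitch that ignores the current key.
    return SoundSpec((MISS_MIDI,), 0.6, 0.0, 0.7)
```

With this voicing, a partial hit followed by a perfect hit steps down from `root + 2` to `root`, which is one way to realise the falling melody line back to the tonic.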

Findings

With harmonic pitch relationships communicating success in the game (perfect hits) and disharmonic relationships communicating failure (partial hits, missed balls), participants were generating their own audio story whilst playing the game.

It became possible to hear participants’ progress through the game and their reactions to events without reference to the screen. For example, it was easy to pick up on changes in key indicating shifts in tension and involvement, as the heartbeat sped up in anticipation of the next wave of balls or relaxed after balls were hit correctly. There was a noticeable difference with more ‘seasoned’ players, who appeared to react to events in a more measured way.

For some players, the harmonic biofeedback sounds blended into the background during gameplay. Some noticed them more during loading sequences or when gameplay was simple:

“it was most apparent at the start when the kind of heartbeat thing kicks in, and it’s like ooh! And then you sort of feel zoned into it…”

Some players noticed differences in the audio but connected them with emotional states only after the game. As planned, players didn’t like hearing the ‘missed ball’ sound. This made some of them more determined to hit the balls so as to avoid hearing it again.